Operationalising an AIMM in the Public Sector

- Juhani Lemmik

- Jun 5, 2024

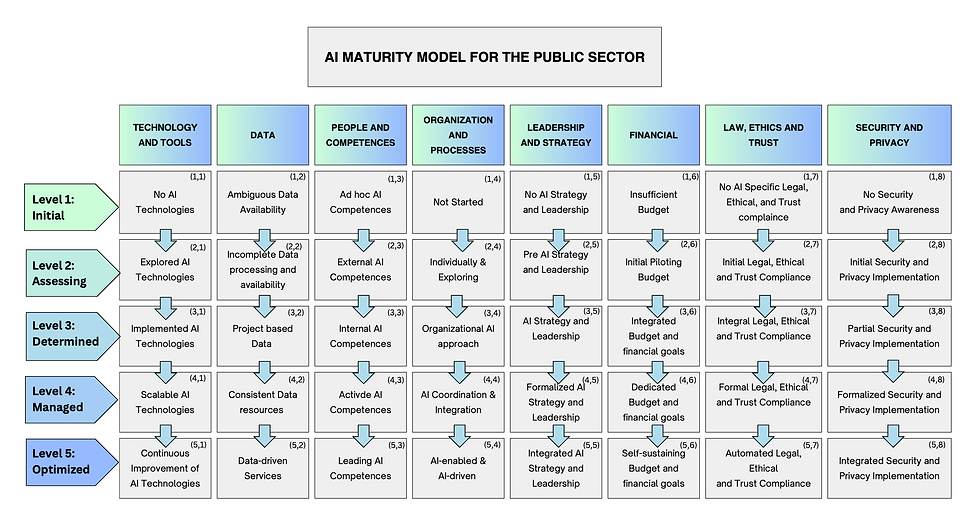

Earlier this week (May 3), I successfully defended my Master's thesis, "Operationalising an AIMM in the Public Sector. Design Science Approach". The AI maturity model (AIMM) that my supervisor Richard Dreyling and I created was the first of its kind designed for practical use in public organisations. Starting from the basic structure of the model (see the figure below), I designed maturity level descriptions that capture the elements relevant at each level under the model's eight dimensions. I then transferred these descriptions into the SurveySparrow online survey tool and accompanied the AIMM with a Methodological Guideline to assist participants in the self-assessment exercise.

Statistics Estonia (SE) agreed to participate in the exercise, and six members of the organisation were selected, based on predefined profiles, to represent key roles in developing and deploying AI solutions. According to the kratid.ee website, SE has been involved in three AI projects: chatbot development with a private partner (2018, finished), creation of an enterprise vitality index and a tool (2022, in partnership with the Ministry of Economic Affairs and Communications (MEAC), ongoing), and design of a prototype for an entrepreneur’s early warning service (2022, in partnership with the MEAC, ongoing). The Department of Experimental Statistics is the hub for everything AI. It uses a range of statistical methods, such as random forests, neural networks, and regression models (Lee, 2023), and has also trained other departments in writing analysis code in R.

I collected feedback from the experts and Statistics Estonia on the usefulness and other aspects of the model and the process. The average rating was 2.5 on a five-point Likert scale (1-5). Not terrible, but certainly not very encouraging either. A key deficiency was that I had not yet delivered the report to Statistics Estonia before sending out the feedback questionnaire, so the participants were probably still wondering about the usefulness of the exercise. Normally, delivery of the report could be followed by a consensus meeting to discuss it and, if management so decides, by an improvement plan to address some of the weaknesses.

Another conclusion from the feedback is that the descriptions of the maturity levels need to be improved. Because each description consists of several elements, the levels were hard to tell apart. It is also uncertain whether the descriptions apply equally well to every kind of public organisation, given the differences in which AI capabilities organisations hold in-house and which they procure externally. This upgrade requires meticulous attention to how the elements are assembled, in terms of their relevance, the right level of aspiration, and the language.

Finally, although the AIMM and its dimensions were broadly approved by the experts, there is certainly room to improve them. First, the model could better capture the need for stakeholder engagement, either by integrating it into the existing maturity level descriptions or by adding one more dimension to the model. Second, more attention should be paid to off-the-shelf AI solutions, because these are easy and cost-efficient to implement.

As outlined above, there are plenty of ways to take this research further. It will be quite a demanding exercise to bring this AIMM to the point where it can actually be used for self-assessment in public organisations. The journey continues.

Source: Dreyling et al. (2024), forthcoming
